DON'T HACK THE PLATFORM ☠️💣💥

In 2022, Jan presented his tricks for hacking the Java platform at Devoxx Belgium and raised awareness of some of the lesser-known vulnerabilities of Java and the JVM.

Watch the talk...

At Yoink, we value the diverse skills and insights that help us build better software. We build and maintain reliable software for medium and large enterprises, using modern technologies and methodologies.

At Yoink, we make it our business to learn about the methods and technologies that help us build better software, better teams and a better future. We believe the ultimate step in a learning journey is to pass it on to others, so we share knowledge through training sessions, meetups and conference talks. There are many great talks available from Yoinkees:

Another day, another silver bullet. The world of software development changes rapidly, and with every new framework come those who claim it is the solution to all your problems.

Watch the talk...

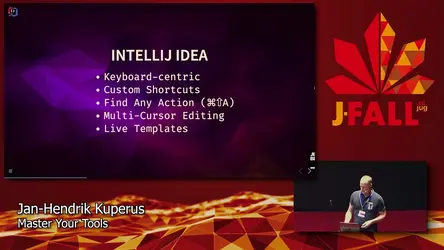

A laptop is to a developer what a toolbox is to a carpenter. This talk showcases ways to speed up a developer's workflow and inspires you to learn about the tools at your fingertips.

Watch the talk...

We also share our thoughts and experiences through articles on our blog. These range from technical how-tos to lessons learned from projects and opinionated essays. Below is an excerpt from the most recent post. You can find more articles on our blog.

Do you have to do code reviews sometimes? I do. On my current project, we use GitHub PRs for that. Some PRs are small and easy to understand just by looking at them on the PR page, but others are too large for that. The linear view on GitHub's PR page offers little help in fully understanding the changes a PR makes. So what do you do?

... continue reading